Ohad Shamir (Weizmann) - Training Neural Networks: The Bigger the Better?

Training Neural Networks: The Bigger the Better?

Artificial neural networks are nowadays routinely trained to solve challenging learning tasks, but our theoretical understanding of this phenomenon remains quite limited. One increasingly popular approach, which is aligned with practice, is to study how making the network sufficiently large (a.k.a. “over-parameterized”) makes the associated training problem easier. In this talk, I'll describe some of the possibilities and challenges in understanding neural networks using this approach. Based on joint work with Itay Safran and Gilad Yehudai.

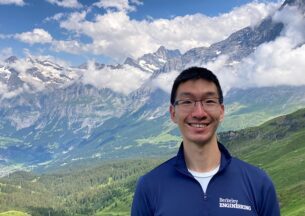

Ohad Shamir

Ohad Shamir is a faculty member at the Department of Computer Science and Applied Mathematics at the Weizmann Institute. He received his PhD in 2010 from the Hebrew University, and was a researcher at Microsoft Research in Boston from 2010 to 2013 and again from 2017 to 2018. His research focuses on theoretical machine learning, in areas such as the theory of deep learning, learning with information and communication constraints, and topics at the intersection of machine learning and optimization. He has received several awards, and has served as program co-chair of COLT as well as a member of its steering committee.